Your Voice is Your Wallet: Voice Commerce is Finally Here

A brief history of voice assistants

For over a decade, voice has been the holy grail of human-machine interaction. Amazon began developing Alexa, its voice assistant, in 2011, poured tens of billions of dollars into it, and sold over 500 million Alexa-enabled devices, including its Echo speakers. Yet reports estimate that the Alexa business lost tens of billions of dollars (!), and at best users relied on Alexa to check the weather or the time, not to make purchases.

There are a few key reasons why we believe Alexa never took off:

Voice recognition (speech-to-text) wasn’t good enough – Alexa often failed to understand commands, and frequent bugs and glitches frustrated users. Automated transcription models weren’t accurate enough to feel natural: their word error rate (WER), the standard metric for transcription accuracy, was simply too high.

Language models weren’t good enough – Alexa couldn’t understand language or user intent well enough to hold natural conversations. It lacked context and felt rigid, making interactions clunky and basic.

Lack of responsiveness / high latency – Responsiveness and low latency are especially important in voice interactions, as anything over ~300 ms stops feeling like a natural conversation. Earlier voice systems relied on a cascading architecture: speech was converted to text, processed, and then converted back to audio. Each step added delay, so the approach was slow and produced rigid, turn-based conversations.

Lack of multimodality – Users prefer voice when it is paired with visuals. A “pure” voice experience doesn’t work for making purchases or handling complex tasks. It’s fine for simple queries or home instructions, like playing music, but not for buying an e-commerce item or booking a vacation. Multimodal experiences like Claude’s computer use or Gemini 2.0, which combine language with what is on the screen, feel far more natural.
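To make the WER point concrete: word error rate is the word-level edit distance between a reference transcript and the model’s output, divided by the number of words in the reference, i.e. WER = (S + D + I) / N, where S, D, and I count substitutions, deletions, and insertions. Below is a minimal sketch in Python; lowercasing and splitting on whitespace is a simplifying assumption, since real evaluations usually apply more careful text normalization.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in reference."""
    ref = reference.lower().split()  # simplistic normalization (assumption)
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # dp[i][j] = minimum edits needed to turn ref[:i] into hyp[:j]
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # substitution (or match if cost is 0)
            )
    return dp[len(ref)][len(hyp)] / len(ref)


# One misheard word in a five-word command already means a 20% WER.
print(word_error_rate("order the nike running shoes", "order the hike running shoes"))  # 0.2
```

A 20% error rate on a single short command is enough to turn an intended purchase into the wrong one, which is why transcription accuracy mattered so much for voice shopping.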

These problems made Alexa a colossal failure despite the enormous resources poured into it. Even with Amazon’s distribution into millions of homes, the promise of voice assistants (and voice shopping) never materialized.

This time it *is* different

Recent advances in AI and voice technology have reshaped the landscape, and we believe that, for the first time, things are truly different. The second wave of voice began in September 2023, when OpenAI introduced voice capabilities, including speech-to-text, in ChatGPT. They were built on Whisper, a speech recognition model OpenAI had released a year earlier, which achieved a very low word error rate and held up well even in noisy environments. A year later, in September 2024, OpenAI rolled out Advanced Voice Mode, a voice-first conversational mode in the ChatGPT app, marking a significant breakthrough.

Advanced Voice Mode made a significant leap by using a speech-to-speech model, which takes in and produces audio directly instead of relying on the cascading architecture of converting speech to text and back. Speech-to-speech processing made voice interactions far more efficient and enabled more natural behaviors, like interrupting the agent mid-sentence, because the model continuously processes and generates audio. With response times of around 300 ms, interactions became much closer to human conversation. This capability is now available to developers through the OpenAI Realtime API, which recently saw a 60% price reduction. (This is not universal: many agents being built today still need more processing, so they transcribe speech to text, run several rounds of text processing, and then convert the result back to speech.)

The difference was immediately clear. ChatGPT’s speech-to-text feature worked seamlessly: transcriptions became remarkably accurate, and speech-to-speech models finally made voices feel natural, matching the cadence of human conversation for the first time. Combined with agent architectures that understand context, keep memory, and use external tools, and with multimodality around the corner, voice agents finally feel possible.

Ultimately, it is the combination of technologies (voice recognition, speech and text analysis, and agentic building blocks like memory and long context windows) that has driven such dramatic improvements in voice capabilities. These advances happened in a decentralized way, with many industry players achieving breakthroughs in parallel, which explains why even a giant like Amazon couldn’t single-handedly bring voice technology to the finish line. Together, they have delivered a level of responsiveness, intelligence, and accuracy in voice interactions that never existed before.

While usage data remains anecdotal (OpenAI and others haven’t released metrics), the shift feels tangible. Each member of the OpenCommerce team uses ChatGPT’s speech-to-text feature for at least 2–3 hours a week on average, mainly to dictate long prompts and occasionally to talk with the voice agent during our commutes to learn about and synthesize new topics. Speaking is roughly 2–3x faster than typing and allows hands-free interaction. The downside is that voice is not ideal in shared or enclosed spaces where others are around (it will be interesting to see how this gets solved).

Another compelling example is Gemini 2.0, released in December 2024, with its real-time multimodal interactions. It lets you work alongside an assistant that can see your screen and hear you at the same time, creating a truly holistic experience. Although multimodality still needs to advance materially, it offers a new way for humans and machines to interact.

In line with our thesis, voice adds a powerful reinforcing force to the trend of ChatGPT transforming search and making strides toward commerce. Voice agents can power the next generation of commerce apps. Some use cases might include:

Voice-powered shopping assistant – Instruct a shopping agent to search for and purchase items on your behalf.

Travel booking by voice – Plan flights, hotels, or rentals entirely through voice commands.

Voice-enabled checkout – Authorize payments and complete transactions with a voice command.

Food delivery apps with voice – Discover restaurants, customize orders, and track deliveries hands-free for a seamless dining experience.

The dream Amazon once envisioned is finally becoming a reality. But new opportunities bring new challenges.

The challenges of voice-based payments

Over the previous decade, engineers were mostly stuck on low-level voice issues: the underlying models and the conversion of speech to text and back. Many are still working on these problems. But as we move up the application stack, new kinds of problems arise. We want to highlight a few challenges at the intersection of voice and payments that we find particularly interesting:

Misinterpreted Commands: While purchasing something in traditional e-commerce is deterministic (you input details into a form and click ‘buy’), voice interactions are non-deterministic. There are countless ways for users to express intent. For example, all of these sentences convey the same meaning:

"I’d like to buy this."

"Can you order this for me?"

"Please purchase this item."

"Add this to my cart and check out.”

"Secure this item for me."

This makes voice confirmations particularly tricky, as they require interpreting and authorizing purchases based on the nuances of human language. For instance, does "I’m ready to purchase this" count as a valid confirmation?

There are various ways to address this. Some are more detrimental to the user experience, like requiring 2FA for every transaction; others use conditional logic, such as requiring 2FA only when the assistant is uncertain or when the purchase exceeds a certain amount. Even so, at scale we should expect occasional mistakes that lead to unintended purchases or actions.

For example, a user might say, “Find this item,” but the assistant misinterprets it as, “Buy this item.”
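The conditional approach can be sketched as a small policy function. This is a hypothetical illustration, not a recommended design: the intent labels, the assumption that the agent’s intent classifier exposes a confidence score, and the thresholds (0.5, 0.9, and $100) are all placeholders.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    EXECUTE = "execute"          # proceed without extra friction
    REQUIRE_2FA = "require_2fa"  # step up: ask for a second factor first
    CLARIFY = "clarify"          # too ambiguous: ask the user to rephrase


@dataclass
class ParsedCommand:
    intent: str        # hypothetical labels such as "search" or "purchase"
    confidence: float  # assumed classifier confidence in [0, 1]
    amount: float      # order total in USD (float for simplicity); 0 if not a purchase


# Illustrative placeholder thresholds, not recommendations.
AMBIGUITY_FLOOR = 0.5
MIN_CONFIDENCE = 0.9
STEP_UP_AMOUNT = 100.0


def decide(cmd: ParsedCommand) -> Action:
    if cmd.intent != "purchase":
        return Action.EXECUTE  # searching or browsing moves no money
    if cmd.confidence < AMBIGUITY_FLOOR:
        return Action.CLARIFY
    if cmd.confidence < MIN_CONFIDENCE or cmd.amount > STEP_UP_AMOUNT:
        return Action.REQUIRE_2FA  # uncertain intent or large amount
    return Action.EXECUTE


print(decide(ParsedCommand("purchase", 0.97, 25.0)))   # Action.EXECUTE
print(decide(ParsedCommand("purchase", 0.72, 25.0)))   # Action.REQUIRE_2FA
print(decide(ParsedCommand("purchase", 0.97, 450.0)))  # Action.REQUIRE_2FA
print(decide(ParsedCommand("purchase", 0.30, 25.0)))   # Action.CLARIFY
```

The sketch also shows the limit of such rules: if the assistant confidently misreads “Find this item” as a purchase, a small order passes straight through, so conditional 2FA reduces unintended purchases rather than eliminating them.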

Unauthorized Access: Who has access to a voice agent’s means of payment? A child saying, “Order this toy,” and triggering a purchase can cause headaches for both parents and agent developers. Apple, for instance, agreed in 2014 to pay at least $32.5 million in refunds to settle FTC charges over in-app purchases made by children without their parents’ consent. Apple was also sued in 2011 in a class-action lawsuit by parents whose children made unauthorized in-app purchases on iOS devices. There are several ways to address this as well. One interesting approach uses voice authentication as one of the authentication factors, which is already common today, for example in voice identification at banks and other financial institutions.
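As a rough illustration of voice as one factor among several: speaker verification systems commonly compare an embedding of the current utterance with a voiceprint captured at enrollment. The sketch below assumes such an embedding model exists (not shown), uses toy three-dimensional vectors in place of real embeddings, and picks a hypothetical 0.8 similarity threshold. Real systems also need defenses such as liveness and anti-spoofing checks, which are out of scope here.

```python
import math
from typing import Mapping, Sequence

VOICE_MATCH_THRESHOLD = 0.8  # hypothetical; would be tuned on real data


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def voice_factor_passed(enrolled: Sequence[float], utterance: Sequence[float]) -> bool:
    # Compare the live utterance's embedding with the enrolled voiceprint.
    return cosine_similarity(enrolled, utterance) >= VOICE_MATCH_THRESHOLD


def purchase_authorized(factors: Mapping[str, bool], required: int = 2) -> bool:
    # Voice is one factor among several (e.g. device possession, PIN);
    # require at least `required` of them to pass.
    return sum(1 for passed in factors.values() if passed) >= required


parent_voiceprint = [0.9, 0.1, 0.3]  # toy stand-ins for real embeddings
voice_ok = voice_factor_passed(parent_voiceprint, [0.88, 0.12, 0.31])
child_ok = voice_factor_passed(parent_voiceprint, [0.2, 0.9, 0.4])
print(voice_ok, child_ok)  # True False
print(purchase_authorized({"voice": child_ok, "device": True, "pin": False}))  # False
```

In this toy setup, a child using the parent’s unlocked device passes only the device factor, so the “Order this toy” purchase is blocked.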

Merchant Verification: When purchasing online, we rely on recognizable brand names and visual cues, such as the familiar Amazon.com interface, combined with SSL certificates, to verify that we are on the legitimate website. However, with voice-driven purchases, these visual assurances disappear, creating new challenges.

We need to ensure that the merchant the voice assistant interacts with is the one the user actually meant. This involves two critical aspects:

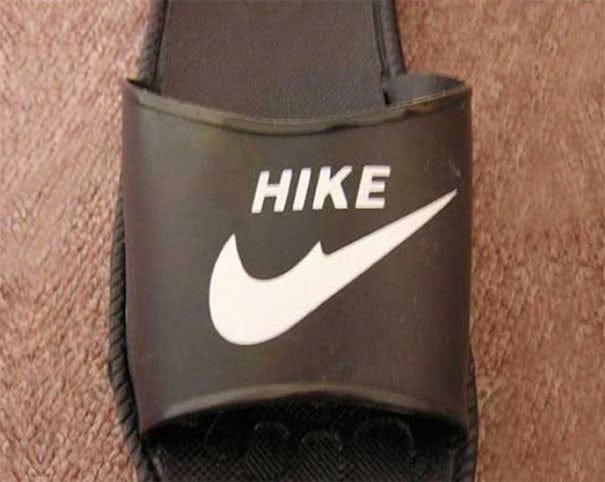

Intent Verification: Ensuring the assistant accurately understands the user’s intent and matches it to the correct merchant or service (hey, I wanted Nike shoes, not Hike!).

Cybersecurity: Confirming that the target domain or service has not been compromised or hijacked.

Without robust systems in place, the lack of visual confirmation in voice-based commerce could expose users to errors or malicious activity.
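One way to approach both aspects is to resolve the merchant name the assistant heard against a registry of known merchants and their expected domains, ask the user to confirm whenever the match is only approximate, and refuse when the checkout domain does not match the registered one. The registry, the domains, and the use of Python’s `difflib` for fuzzy matching are illustrative assumptions, and domain matching covers only a small part of the cybersecurity aspect.

```python
import difflib
from dataclasses import dataclass
from typing import Optional

# Hypothetical registry: merchant name -> domain where checkout is expected.
KNOWN_MERCHANTS = {
    "nike": "nike.com",
    "adidas": "adidas.com",
    "puma": "puma.com",
}


@dataclass
class MerchantCheck:
    status: str                     # "ok", "confirm", or "reject"
    merchant: Optional[str] = None  # registry entry we matched, if any
    reason: str = ""


def verify_merchant(heard_name: str, checkout_domain: str) -> MerchantCheck:
    name = heard_name.strip().lower()
    matches = difflib.get_close_matches(name, KNOWN_MERCHANTS.keys(), n=1, cutoff=0.6)
    if not matches:
        return MerchantCheck("reject", reason=f"unknown merchant {heard_name!r}")
    merchant = matches[0]
    if KNOWN_MERCHANTS[merchant] != checkout_domain.lower():
        # Intent may be right, but payment would go somewhere unexpected.
        return MerchantCheck("reject", merchant, f"{checkout_domain!r} is not registered for {merchant}")
    if merchant != name:
        # Near-miss such as "hike" -> "nike": confirm before paying.
        return MerchantCheck("confirm", merchant, f"did you mean {merchant!r}?")
    return MerchantCheck("ok", merchant)


print(verify_merchant("Nike", "nike.com").status)            # ok
print(verify_merchant("Hike", "nike.com").status)            # confirm
print(verify_merchant("Nike", "nike-deals.example").status)  # reject
```

Asking for confirmation on near-misses keeps the extra friction limited to the cases where a misheard name is plausible.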

Exploitation of Multimodal Interactions: This might sound futuristic, but imagine fraudsters combining voice and visuals to trick users. For example, a screen overlay shows a legitimate merchant, but the voice assistant processes a payment to a fraudulent one.

This kind of attack exploits the mismatch between what the user sees and what the assistant does. Solving it will require checks that ensure the voice assistant’s actions match what is shown on the screen.
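A minimal version of such a check compares what the user was shown with what the agent is about to pay, and blocks the payment on any mismatch. The big assumption is that the displayed offer comes from trusted application state rather than from screen pixels, which an overlay could spoof; the identifiers and field names are hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class DisplayedOffer:
    merchant_id: str  # what the user was shown (assumed from trusted UI state)
    amount: Decimal
    currency: str


@dataclass(frozen=True)
class PaymentInstruction:
    payee_id: str     # what the agent is about to pay
    amount: Decimal
    currency: str


def mismatches(shown: DisplayedOffer, payment: PaymentInstruction) -> list:
    """Return human-readable problems; an empty list means the two agree."""
    problems = []
    if shown.merchant_id != payment.payee_id:
        problems.append(f"payee {payment.payee_id!r} differs from displayed merchant {shown.merchant_id!r}")
    if shown.amount != payment.amount or shown.currency != payment.currency:
        problems.append(f"amount {payment.amount} {payment.currency} differs from displayed {shown.amount} {shown.currency}")
    return problems


shown = DisplayedOffer("merchant_123", Decimal("49.99"), "USD")
payment = PaymentInstruction("merchant_999", Decimal("49.99"), "USD")
problems = mismatches(shown, payment)
if problems:
    print("Blocked:", problems)  # the payee differs from what was displayed
```

Using `Decimal` for amounts avoids floating-point surprises when comparing money values exactly.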

New types of payments mean new types of requirements

We refer to these new types of payments as autonomous or agentic payments. While there are many technical solutions for each challenge, they all share a common need: a new layer of data and guardrails to define what constitutes a legitimate payment approval. This includes determining what new types of data to collect, how to gather them, and how to transfer this information to the relevant stakeholders in the payments supply chain (e.g., merchant, issuer, PSP, card network).

Some foundational requirements for voice payments include:

Context Collection: Capturing details about the transaction, such as how the approval was made.

Authentication Mechanisms: Ensuring robust user verification.

Cryptographic Signatures: Adding an additional layer of security by having the user cryptographically sign their approval.

These measures will help build trust and reliability in this emerging payment paradigm.
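To illustrate how context collection and a signed approval could fit together, here is a sketch that captures the context of a voice approval, serializes it deterministically, and signs it. The field names are hypothetical and not part of any existing network standard. HMAC-SHA256 from Python’s standard library is used only as a stand-in: a real user signature would more likely rely on an asymmetric scheme such as Ed25519 or ECDSA with a device-bound key, so that merchants, PSPs, and issuers can verify approvals without holding the secret.

```python
import hashlib
import hmac
import json
import time


def canonical_bytes(record: dict) -> bytes:
    # Deterministic serialization so signer and verifier hash identical bytes.
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_approval(record: dict, key: bytes) -> str:
    # Stand-in for a user signature (HMAC-SHA256, symmetric key).
    return hmac.new(key, canonical_bytes(record), hashlib.sha256).hexdigest()


def verify_approval(record: dict, signature: str, key: bytes) -> bool:
    # Constant-time comparison to avoid leaking timing information.
    return hmac.compare_digest(sign_approval(record, key), signature)


# Hypothetical approval context captured at the moment of confirmation.
approval = {
    "transaction_id": "txn_0001",
    "merchant": "nike.com",
    "amount": "129.00",          # string, to avoid float rounding
    "currency": "USD",
    "channel": "voice",
    "utterance": "Yes, buy the running shoes.",
    "intent_confidence": 0.96,
    "auth_factors": ["voice_match", "device_key"],
    "approved_at": int(time.time()),
}

device_key = b"demo-key-held-on-the-user-device"  # placeholder secret
signature = sign_approval(approval, device_key)

print(verify_approval(approval, signature, device_key))  # True
tampered = dict(approval, amount="1290.00")
print(verify_approval(tampered, signature, device_key))  # False
```

Because the signature covers how the approval was made (channel, utterance, confidence, factors), any downstream party can detect if that context or the amount was altered after the user said yes.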

Summary

Voice assistants have been a long-standing dream of human-machine interaction, but early efforts like Amazon’s Alexa fell short due to poor voice recognition, limited language understanding, high latency, and a lack of multimodality. Despite billions in investment, Alexa never moved beyond simple queries, leaving the vision unfulfilled.

Fast forward to today: advances in AI and speech-to-speech models are making voice technology finally feel natural and responsive. OpenAI’s Whisper and Advanced Voice Mode, together with multimodal capabilities like Gemini 2.0, have transformed voice assistants into tools that feel human-like, context-aware, and versatile. Voice is faster than typing and allows hands-free interaction, opening possibilities for commerce, travel, and beyond.

However, this new era brings fresh challenges, especially in payments. Misinterpreted commands, unauthorized access, and a lack of merchant verification create risks unique to voice-based transactions. Solving these issues will require measures such as context collection, voice authentication, and cryptographic signatures.

Voice is positioned to become a cornerstone of human-machine interaction, with commerce and payments leading the charge—but new opportunities demand new safeguards.

At OpenCommerce, we’re building the infrastructure for autonomous payments. If you have insights or want to collaborate, email us at:

ayal@opencommerce.xyz | barak@opencommerce.xyz | idan@opencommerce.xyz