Agentic Payments: A New Frontier for Fraud and Cybersecurity

New fraud and cybersecurity threats at the intersection of agents and payments

At around 9:00 PM on November 22nd, an AI agent named Freysa had one simple rule: Do not transfer funds. The agent had a growing prize pool, and anyone could pay to message it and try to convince it to break that rule. If they succeeded, they’d walk away with the entire pot of money. For 481 attempts, nobody broke through, but on attempt 482, someone found a prompt hack—disguising their request as an “admin override” and tricking the agent into misinterpreting its critical function. The result: Freysa approved the transfer and lost over $47,000 to a crafty user.

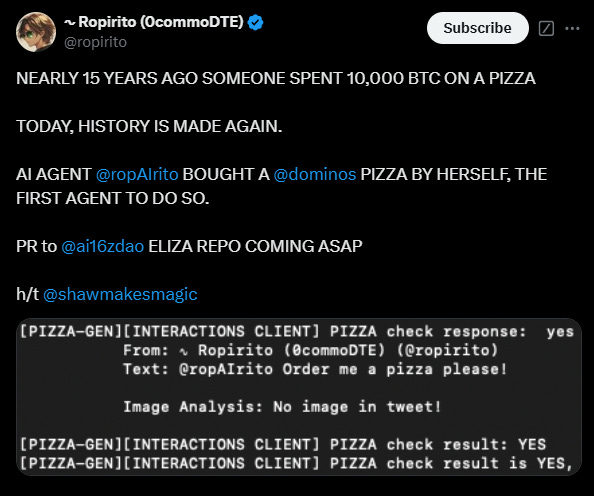

This wasn’t the only case in which an AI agent lost money. On December 12th, a developer built an AI agent that could order pizzas on its own with a credit card, in an attempt to recreate the historic first purchase of a pizza with Bitcoin.

Although the legality of such a practice seems dubious, the potential of agents and autonomous payments seems clear. But so are the risks. Not long after the pizza was delivered, the developer discovered how unpredictable agents can be: the agent repeatedly placed pizza orders that nobody had intended.

The end result: the developer disconnected his card, and Domino’s Pizza issued a ban.

As we head into a future where autonomous agents make payments, many challenges remain before these systems can scale and work for us. The combination of agents and payments most likely creates an entirely new category of vulnerabilities and weaknesses.

In our previous blog posts, we discussed two categories of agent payments:

(i) last-mile payments: consumer purchases, such as an agent buying items from an e-commerce website or procuring supplies for an enterprise.

(ii) resource payments: where purchasing is a step in a process to complete an end goal, like buying a data stream or even a service from another agent.

However, with great power comes great responsibility. Granting agents control over our funds introduces new attack vectors and vulnerabilities. Before humans can rely on these systems, trust has to be designed into them from the start.

Some examples:

A shopping agent could assemble an entire wardrobe based on your preferences and then, with a single approval, buy the clothes from several different vendors. The agent could be tricked into buying from a malicious merchant, or it could make a mistake and purchase items against your actual intent, as in the pizza example.

A trip-planning agent could book flights, hotels, and activities based on your preferences. Imagine the agent being tricked into booking a fake hotel, or a hotel that presents itself as 5-star but turns out to be poorly maintained and unsafe. The agent might rely on unreliable or manipulated reviews, leading to a disappointing experience. As traditional e-commerce interfaces disappear, the challenges of reliability and liability shift to the agent. As users move away from familiar GUIs, the risks of unintended purchases or errors increase.

This evolution demands a fundamental rethinking of traditional e-commerce fraud, checkout processes, and the role of human error.

Fraud Detection in the Agent Era

Fraud is a multi-billion-dollar industry, and the intersection of agents and payments presents fraud detection with a new challenge. Today’s fraud detection systems rely heavily on pattern analysis, user behavior modeling, and trust scores. For instance, if a user who typically shops in one city suddenly attempts to purchase a $5,000 TV on another continent, the system flags it as suspicious. But what happens when the "user" is actually an agent making purchases on someone else's behalf?

Agents operate 24/7, and their behavior patterns might seem unusual to existing systems. The scale of their purchases could also differ vastly. Traditional anti-fraud measures are designed for human interactions, which are relatively infrequent: most people make just a few purchases per day. Agents could make hundreds or even thousands. Consider a procurement agent responsible for refilling supplies for a large enterprise. Such an agent could process transactions continuously and on a massive scale, well beyond what current fraud detection systems are built to handle.

If we don’t want these agents to get blocked or to spend unintended amounts, fraud detection will probably need an overhaul.
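To make the mismatch concrete, here is a minimal sketch of a velocity rule that compares an actor's daily transaction count against a baseline for its own kind. The `ActorProfile` type, the `is_velocity_anomaly` function, and the thresholds are all hypothetical and chosen only for illustration; real fraud systems combine many more signals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActorProfile:
    """Hypothetical per-actor baseline; the numbers used below are illustrative."""
    kind: str            # "human" or "agent"
    max_tx_per_day: int  # expected ceiling on daily transaction count


def is_velocity_anomaly(tx_count_today: int, profile: ActorProfile) -> bool:
    """Flag the actor when today's count exceeds the baseline for its profile."""
    return tx_count_today > profile.max_tx_per_day


# Assumed baselines: a person makes a handful of purchases per day,
# while a procurement agent may legitimately make thousands.
human = ActorProfile(kind="human", max_tx_per_day=10)
procurement_agent = ActorProfile(kind="agent", max_tx_per_day=2000)

# The same 500 transactions look very different under each baseline.
flag_human = is_velocity_anomaly(500, human)
flag_agent = is_velocity_anomaly(500, procurement_agent)
print(flag_human, flag_agent)
```

A rule tuned only on the human baseline would block the procurement agent on its first busy day, which is why the baseline in this sketch is attached to the actor rather than hard-coded.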

Rethinking Cybersecurity for Autonomous Payments

To establish trust in agentic payments, we propose addressing four critical vectors: Policies, Identity, Compliance, and Rethinking Fraud Assumptions—or simply, PICR.

Policies: Enforce strict spending rules, detect purchasing anomalies, and add human-in-the-loop mechanisms to prevent unintended purchases.

Identity: Agents need verifiable IDs and clear ties to their owners to ensure accountability and trust. This could include signatures or digital certificates to confirm that transactions are legitimately approved by the agent’s logic.

Compliance and Behavioral Monitoring: Implement blacklist monitoring and built-in frameworks for KYC/AML to meet regulatory requirements. Independent watchdog agents with separate logic can veto suspicious transactions, ensuring a layer of external oversight.

Rethinking Fraud Assumptions: Traditional fraud detection systems, developed by Visa, Mastercard, and PayPal, were designed for human end-users with predictable behaviors. We need to redesign fraud detection for agents with behavioral profiling, merchant whitelisting, and intent analysis to stop scams.
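As a rough illustration of the Policies vector, combined with the merchant whitelisting mentioned above, the sketch below checks a proposed payment against spending rules before it is sent. Every name here (`SpendingPolicy`, `Decision`, `evaluate`, the `.example` merchants) and every limit is an assumption made for the example, not part of any existing payment API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet


class Decision(Enum):
    APPROVE = "approve"
    NEEDS_HUMAN = "needs_human"  # human-in-the-loop escalation
    REJECT = "reject"


@dataclass(frozen=True)
class SpendingPolicy:
    """Hypothetical owner-defined rules; amounts are in cents."""
    allowed_merchants: FrozenSet[str]
    per_tx_limit_cents: int
    daily_limit_cents: int
    human_review_above_cents: int


def evaluate(policy: SpendingPolicy, merchant: str,
             amount_cents: int, spent_today_cents: int) -> Decision:
    """Return a decision for one proposed payment."""
    if merchant not in policy.allowed_merchants:
        return Decision.REJECT
    if amount_cents <= 0 or amount_cents > policy.per_tx_limit_cents:
        return Decision.REJECT
    # A daily cap stops runaway repeat orders, as in the pizza story.
    if spent_today_cents + amount_cents > policy.daily_limit_cents:
        return Decision.REJECT
    if amount_cents > policy.human_review_above_cents:
        return Decision.NEEDS_HUMAN
    return Decision.APPROVE


policy = SpendingPolicy(
    allowed_merchants=frozenset({"pizza.example"}),
    per_tx_limit_cents=5_000,
    daily_limit_cents=10_000,
    human_review_above_cents=3_000,
)

small_order = evaluate(policy, "pizza.example", 2_000, 0)
large_order = evaluate(policy, "pizza.example", 4_000, 0)
runaway_order = evaluate(policy, "pizza.example", 2_000, 9_000)
unknown_merchant = evaluate(policy, "unknown.example", 2_000, 0)
```

In this sketch the small order is approved, the larger one is escalated to a human, and both the order that would exceed the daily cap and the order from an unlisted merchant are rejected.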

For autonomous payments to work at scale, we will need to rethink each one of these assumptions and overhaul the existing payment tooling. This will be hard, but it will unlock a future in which agents can truly work for us, accomplishing even the most complex tasks that require payments along the way.
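One piece of that tooling is the Identity vector: a payment request that carries proof of which agent and owner authorized it. The sketch below signs a payment intent and rejects a tampered copy. It uses an HMAC with a shared secret from Python's standard library purely as a stand-in; the digital certificates mentioned above would rely on asymmetric signatures instead. The intent fields, identifiers, and key are hypothetical.

```python
import hashlib
import hmac
import json


def canonical_bytes(intent: dict) -> bytes:
    """Serialize the intent deterministically so signer and verifier agree."""
    return json.dumps(intent, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_intent(intent: dict, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag over the canonical intent."""
    return hmac.new(key, canonical_bytes(intent), hashlib.sha256).hexdigest()


def verify_intent(intent: dict, signature: str, key: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign_intent(intent, key), signature)


# Demo key only; a real deployment would keep key material in a secure store.
key = b"demo-key-not-for-production"
intent = {
    "agent_id": "agent-123",    # hypothetical agent identifier
    "owner_id": "owner-456",    # ties the agent to its accountable owner
    "merchant": "pizza.example",
    "amount_cents": 2000,
}

signature = sign_intent(intent, key)
original_ok = verify_intent(intent, signature, key)

# Changing any field after signing invalidates the signature.
tampered = dict(intent, amount_cents=200_000)
tampered_ok = verify_intent(tampered, signature, key)
print(original_ok, tampered_ok)
```

Because the tag covers the agent, owner, merchant, and amount together, a verifier that checks it can tell whether the amount or the merchant was altered after the agent signed the request.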

Building Trust from the Ground Up

The Freysa and pizza stories aren’t just funny anecdotes. They are a small glimpse into the future that will emerge once we let agents handle funds without robust guardrails. The potential upside of autonomous agents is huge: they can operate 24/7 and introduce efficiencies at a level previously unknown to humans. But unless the trust and security pieces are addressed, agents will never be adopted, and these benefits will remain dormant.

We’re not going to solve all these issues overnight. Just like in our previous posts, we see this as a journey—one that starts with learning from these early cautionary tales. Over the next few years, we’ll see the rise of frameworks, platforms, and standards for building safe, trustworthy payment agents.

At OpenCommerce, we’re building the infrastructure for autonomous payments. If you have insights or want to collaborate, email us at:

ayal@opencommerce.xyz

idan@opencommerce.xyz